Goes into a bit more detail about what some of the pipeline steps do, and how the pipeline accesses the Datastore.Īs part of our new template-ready pipeline definition, we’ll specify that the pipeline takes a (It would be equally straightforward to write results It writes the results to three BigQuery tables. Popular URLs in terms of their count, and then derives relevant word co-occurrences using an approximation to a tf*idf It finds the most popular words in terms of the percentage of tweets they were found in, calculates the most It reads recent tweets from the past N days from Cloud Datastore, then splits into three processing branches. You can see the pipeline definition here. It with the -template_location flag, which causes the template to be compiled and stored at the given We do this by building a pipeline and then The first step in building our app is creating a Dataflow template. Detecting important word co-occurrences in tweets Defining a parameterized Dataflow pipeline and creating a template The pipeline does several sorts of analysis on the tweet data for example, it identifies important word co-occurrences in the tweets, based on a variant of the tf*idf metric.

This case, stored tweets fetched periodically from Twitter. The pipeline used in this example is nearly the same as that described in the We’ll use that feature in this example too. Because we’re now simply calling anĪPI, and no longer relying on the gcloud sdk to launch from App Engine, we can build a simpler App Engine To periodically launch a Dataflow templated job from GAE.

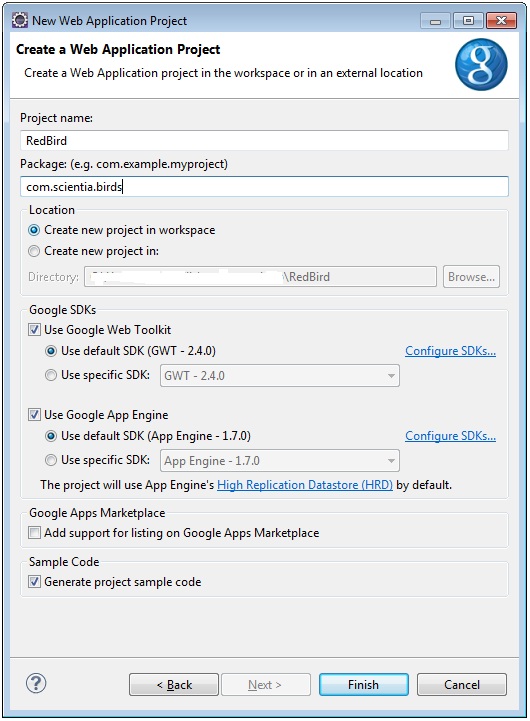

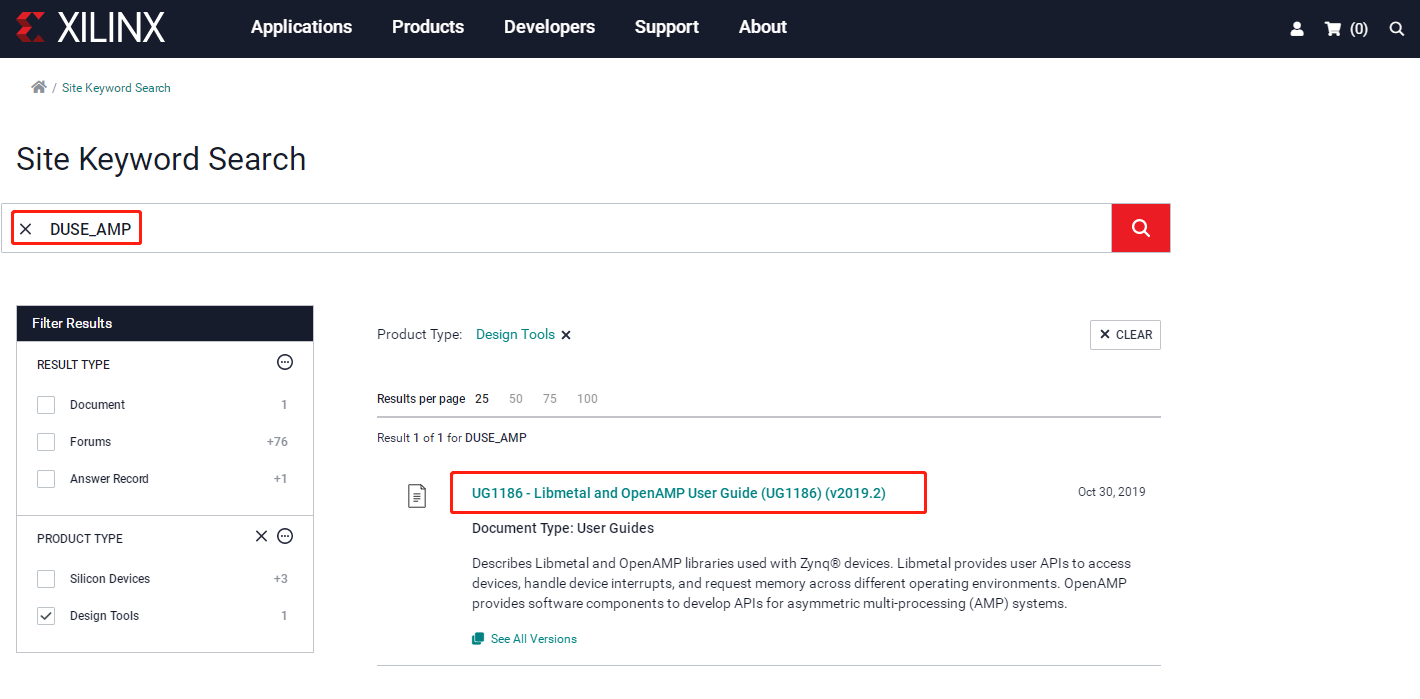

#Google app engine sdk compiler flags how to

In this post, we’ll show how to use the Dataflow job template The Google Cloud Platform Console, the gcloud command-line interface, or the REST API. It’s much easier for non-technical users to launch pipelines using templates.This means that you don’t need to launch your pipeline from a development environment or worry about dependencies.With templates, you don’t have to recompile your code every time you execute a pipeline.

Google Cloud Storage and execute them from a variety of environments. Dataflow templates allow you to stage your pipelines on Since then, Cloud Dataflow templates have come into the

#Google app engine sdk compiler flags install

To install the gcloud sdk in the instance container(s) in order to launch the pipelines. The use of GAE Flex was necessary at the time, because we needed To periodically launch a Python Dataflow pipeline. This post builds on a previous post, which Jobs and many other data processing and analysis tasks. To easily launch Dataflow pipelines from a Google App Engine (GAE) app, This post describes how to use Cloud Dataflow job templates Using Cloud Dataflow pipeline templates from App Engine
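Because the template is parameterized, each launch can tell the pipeline which window of recent tweets to analyze. The plain-Python sketch below (not Beam code) shows the filtering idea only. It assumes a hypothetical ISO-8601 `run_timestamp` value standing in for the runtime parameter, a hypothetical `days` window size, and tweets represented as dicts with a naive `datetime` under a hypothetical `created_at` key.

```python
from datetime import datetime, timedelta


def recent_tweets(tweets, run_timestamp, days):
    """Keep tweets created within `days` days before `run_timestamp`.

    `run_timestamp` is an ISO-8601 string such as "2017-05-10T00:00:00"
    and stands in for a hypothetical runtime parameter supplied at launch.
    Each tweet is a dict holding a naive datetime under "created_at".
    """
    end = datetime.fromisoformat(run_timestamp)
    start = end - timedelta(days=days)
    # The window is inclusive at both ends.
    return [t for t in tweets if start <= t["created_at"] <= end]


# Usage: with a 3-day window ending 2017-05-10, only the May 9 tweet is kept.
sample = [
    {"id": 1, "created_at": datetime(2017, 5, 1, 12)},
    {"id": 2, "created_at": datetime(2017, 5, 9)},
]
kept_ids = [t["id"] for t in recent_tweets(sample, "2017-05-10T00:00:00", days=3)]
```

Passing the reference time in at launch, rather than calling `datetime.now()` inside the pipeline, keeps the staged template fixed while each scheduled run analyzes its own window. In an actual Beam template, such a value would be declared with `add_value_provider_argument` in a `PipelineOptions` subclass and read with `.get()` at execution time rather than at pipeline construction time.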
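The first two processing branches (popular words by share of tweets, popular URLs by raw count) are straightforward aggregations. As a plain-Python illustration of what they compute, and not the pipeline's actual Beam code, here is a minimal sketch. The tweet shape (dicts with hypothetical `text` and `urls` keys) and the simple regex tokenizer are assumptions.

```python
import re
from collections import Counter


def word_percentages(tweets):
    """Map each word to the percentage of tweets that contain it."""
    if not tweets:
        return {}
    tweets_containing = Counter()
    for tweet in tweets:
        # Hypothetical tokenizer: lowercase runs of letters and apostrophes.
        # Using a set counts each word at most once per tweet.
        words = set(re.findall(r"[a-z']+", tweet["text"].lower()))
        tweets_containing.update(words)
    return {w: 100.0 * n / len(tweets) for w, n in tweets_containing.items()}


def url_counts(tweets):
    """Count every occurrence of each URL across all tweets."""
    counts = Counter()
    for tweet in tweets:
        counts.update(tweet.get("urls", []))
    return counts
```

Deduplicating words per tweet is what turns a raw frequency into a "percentage of tweets" figure, while URLs are counted every time they appear. `url_counts(tweets).most_common(10)` would then give the ten most popular URLs.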
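From App Engine, launching a staged template comes down to one authenticated POST to the Dataflow REST API's `projects.templates.launch` method (`v1b3/projects/{projectId}/templates:launch`, with the template's Cloud Storage path in the `gcsPath` query parameter). The standard-library sketch below only builds that request and does not send it. The project ID, bucket path, job name, parameter name, and the way the OAuth access token is obtained (for example, from the app's service account) are all assumptions.

```python
import json
import urllib.parse
import urllib.request

DATAFLOW_API = "https://dataflow.googleapis.com/v1b3"


def build_launch_request(project_id, gcs_path, job_name, parameters, access_token):
    """Build (but do not send) a templates.launch request for a staged template."""
    # The template's Cloud Storage location goes in the gcsPath query parameter.
    query = urllib.parse.urlencode({"gcsPath": gcs_path})
    url = f"{DATAFLOW_API}/projects/{project_id}/templates:launch?{query}"
    body = {
        "jobName": job_name,
        # Runtime parameters are passed as a string-to-string map.
        "parameters": parameters,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Calling `urllib.request.urlopen(req)` on the result would send it. A periodic launcher would likely derive the job name from the launch time so that each run gets a distinct name.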
